双层神经网络

我们选用ReLU函数和softmax函数:

步骤:

1、LOSS损失函数(前向传播)与梯度(后向传播)计算

Forward: 计算score,再根据score计算loss

Backward:分别对W2、b2、W1、b1求梯度

def loss(self, X, y=None, reg=0.0):

# Unpack variables from the params dictionary

W1, b1 = self.params['W1'], self.params['b1']

W2, b2 = self.params['W2'], self.params['b2']

N, D = X.shape

# Compute the forward pass

scores = None

h1 = np.maximum(0, np.dot(X,W1) + b1) #(5,10)

scores = np.dot(h1,W2) + b2 # (5,3)

if y is None:

return scores

# Compute the loss

loss = None

exp_S = np.exp(scores) #(5,3)

sum_exp_S = np.sum(exp_S,axis = 1)

sum_exp_S = sum_exp_S.reshape(-1,1) #(5,1)

#print (sum_exp_S.shape)

loss = np.sum(-scores[range(N),list(y)]) + sum(np.log(sum_exp_S))

loss = loss / N + 0.5 * reg * np.sum(W1 * W1) + 0.5 * reg * np.sum(W2 * W2)

# Backward pass: compute gradients

grads = {}

#---------------------------------#

dscores = np.zeros(scores.shape)

dscores[range(N),list(y)] = -1

dscores += (exp_S/sum_exp_S) #(5,3)

dscores /= N

grads['W2'] = np.dot(h1.T, dscores)

grads['W2'] += reg * W2

grads['b2'] = np.sum(dscores, axis = 0)

#---------------------------------#

dh1 = np.dot(dscores, W2.T) #(5,10)

dh1_ReLU = (h1>0) * dh1

grads['W1'] = X.T.dot(dh1_ReLU) + reg * W1

grads['b1'] = np.sum(dh1_ReLU, axis = 0)

#---------------------------------#

return loss, grads

2、训练函数 (迭代过程:forward–>backward–>update–>forward–>backward->update……)

def train(self, X, y, X_val, y_val,

learning_rate=1e-3, learning_rate_decay=0.95,

reg=5e-6, num_iters=100,

batch_size=200, verbose=False):

num_train = X.shape[0]

iterations_per_epoch = max(num_train / batch_size, 1)

# Use SGD to optimize the parameters in self.model

loss_history = []

train_acc_history = []

val_acc_history = []

for it in xrange(num_iters):

X_batch = None

y_batch = None

mask = np.random.choice(num_train,batch_size,replace = True)

X_batch = X[mask]

y_batch = y[mask]

# Compute loss and gradients using the current minibatch

loss, grads = self.loss(X_batch, y=y_batch, reg=reg)

loss_history.append(loss)

self.params['W1'] += -learning_rate * grads['W1']

self.params['b1'] += -learning_rate * grads['b1']

self.params['W2'] += -learning_rate * grads['W2']

self.params['b2'] += -learning_rate * grads['b2']

if verbose and it % 100 == 0:

print('iteration %d / %d: loss %f' % (it, num_iters, loss))

# Every epoch, check train and val accuracy and decay learning rate.

if it % iterations_per_epoch == 0:

# Check accuracy

#print ('第%d个epoch' %it)

train_acc = (self.predict(X_batch) == y_batch).mean()

val_acc = (self.predict(X_val) == y_val).mean()

train_acc_history.append(train_acc)

val_acc_history.append(val_acc)

# Decay learning rate

learning_rate *= learning_rate_decay #减小学习率

return {

'loss_history': loss_history,

'train_acc_history': train_acc_history,

'val_acc_history': val_acc_history,

}

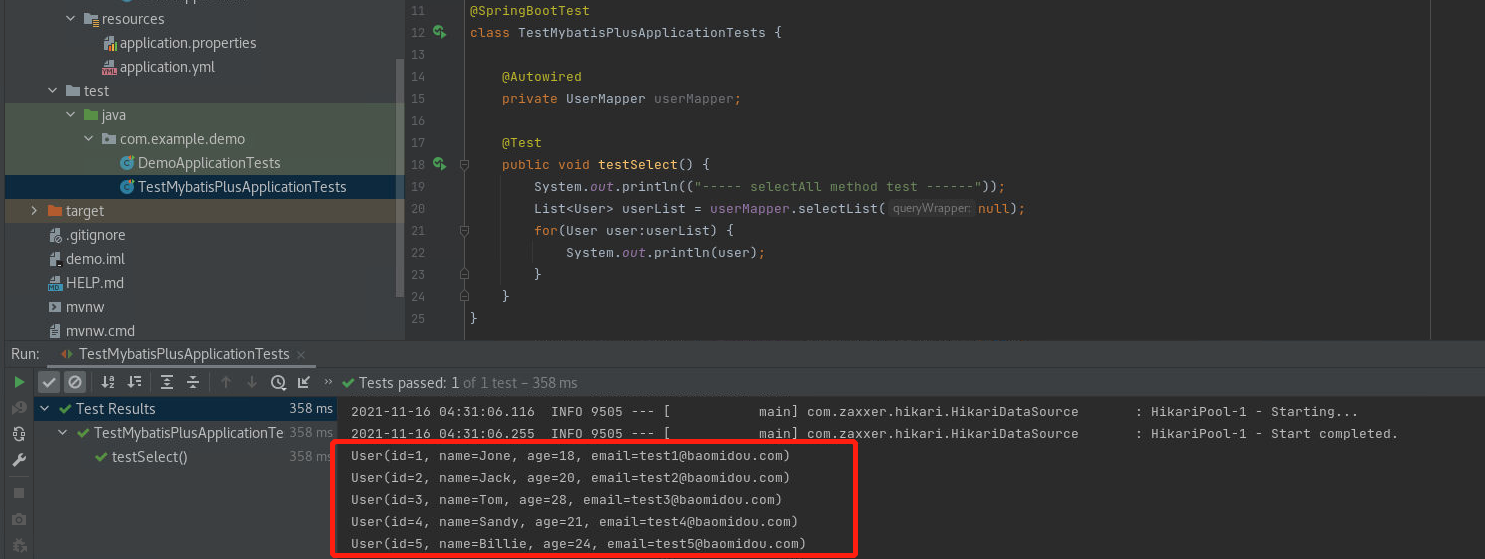

3、预测函数

4、参数训练

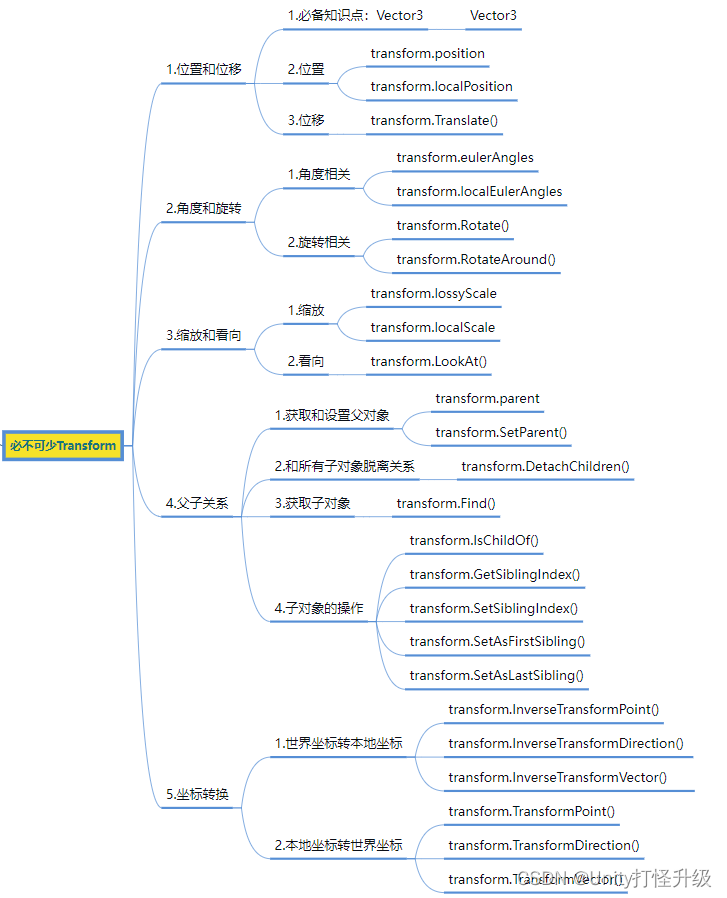

用于机器视觉识别的卷积神经网络

多层全连接神经网络

两个基本的layer:

def affine_forward(x, w, b):

out = None

N=x.shape[0]

x_new=x.reshape(N,-1)#转为二维向量

out=np.dot(x_new,w)+b

cache = (x, w, b) # 不需要保存out

return out, cache

def affine_backward(dout, cache):

x, w, b = cache

dx, dw, db = None, None, None

dx=np.dot(dout,w.T)

dx=np.reshape(dx,x.shape)

x_new=x.reshape(x.shape[0],-1)

dw=np.dot(x_new.T,dout)

db=np.sum(dout,axis=0,keepdims=True)

return dx, dw, db

def relu_forward(x):

out = None

out=np.maximum(0,x)

cache = x

return out, cache

def relu_backward(dout, cache):

dx, x = None, cache

return dx

构建一个Sandwich的层:

def affine_relu_forward(x, w, b):

a, fc_cache = affine_forward(x, w, b)

out, relu_cache = relu_forward(a)

cache = (fc_cache, relu_cache)

return out, cache

def affine_relu_backward(dout, cache):

fc_cache, relu_cache = cache

da = relu_backward(dout, relu_cache)

dx, dw, db = affine_backward(da, fc_cache)

return dx, dw, db

FullyConnectedNet:

class FullyConnectedNet(object):

def __init__(self, hidden_dims, input_dim=3*32*32, num_classes=10,

dropout=0, use_batchnorm=False, reg=0.0,

weight_scale=1e-2, dtype=np.float32, seed=None):

self.use_batchnorm = use_batchnorm

self.use_dropout = dropout > 0

self.reg = reg

self.num_layers = 1 + len(hidden_dims)

self.dtype = dtype

self.params = {}

layers_dims = [input_dim] + hidden_dims + [num_classes] #z这里存储的是每个layer的大小

for i in xrange(self.num_layers):

self.params['W' + str(i + 1)] = weight_scale * np.random.randn(layers_dims[i], layers_dims[i + 1])

self.params['b' + str(i + 1)] = np.zeros((1, layers_dims[i + 1]))

if self.use_batchnorm and i < len(hidden_dims):#最后一层是不需要batchnorm的

self.params['gamma' + str(i + 1)] = np.ones((1, layers_dims[i + 1]))

self.params['beta' + str(i + 1)] = np.zeros((1, layers_dims[i + 1]))

self.dropout_param = {}

if self.use_dropout:

self.dropout_param = {'mode': 'train', 'p': dropout}

if seed is not None:

self.dropout_param['seed'] = seed

self.bn_params = []

if self.use_batchnorm:

self.bn_params = [{'mode': 'train'} for i in xrange(self.num_layers - 1)]

# Cast all parameters to the correct datatype

for k, v in self.params.iteritems():

self.params[k] = v.astype(dtype)

def loss(self, X, y=None):

X = X.astype(self.dtype)

mode = 'test' if y is None else 'train'

if self.dropout_param is not None:

self.dropout_param['mode'] = mode

if self.use_batchnorm:

for bn_param in self.bn_params:

bn_param[mode] = mode

scores = None

h, cache1, cache2, cache3,cache4, bn, out = {}, {}, {}, {}, {}, {},{}

out[0] = X #存储每一层的out,按照逻辑,X就是out0[0]

# Forward pass: compute loss

for i in xrange(self.num_layers - 1):

# 得到每一层的参数

w, b = self.params['W' + str(i + 1)], self.params['b' + str(i + 1)]

if self.use_batchnorm:

gamma, beta = self.params['gamma' + str(i + 1)], self.params['beta' + str(i + 1)]

h[i], cache1[i] = affine_forward(out[i], w, b)

bn[i], cache2[i] = batchnorm_forward(h[i], gamma, beta, self.bn_params[i])

out[i + 1], cache3[i] = relu_forward(bn[i])

if self.use_dropout:

out[i+1], cache4[i] = dropout_forward(out[i+1] , self.dropout_param)

else:

out[i + 1], cache3[i] = affine_relu_forward(out[i], w, b)

if self.use_dropout:

out[i + 1], cache4[i] = dropout_forward(out[i + 1], self.dropout_param)

W, b = self.params['W' + str(self.num_layers)], self.params['b' + str(self.num_layers)]

scores, cache = affine_forward(out[self.num_layers - 1], W, b) #对最后一层进行计算

if mode == 'test':

return scores

loss, grads = 0.0, {}

data_loss, dscores = softmax_loss(scores, y)

reg_loss = 0

for i in xrange(self.num_layers):

reg_loss += 0.5 * self.reg * np.sum(self.params['W' + str(i + 1)] * self.params['W' + str(i + 1)])

loss = data_loss + reg_loss

# Backward pass: compute gradients

dout, dbn, dh, ddrop = {}, {}, {}, {}

t = self.num_layers - 1

dout[t], grads['W' + str(t + 1)], grads['b' + str(t + 1)] = affine_backward(dscores, cache)#这个cache就是上面得到的

for i in xrange(t):

if self.use_batchnorm:

if self.use_dropout:

dout[t - i] = dropout_backward(dout[t-i], cache4[t-1-i])

dbn[t - 1 - i] = relu_backward(dout[t - i], cache3[t - 1 - i])

dh[t - 1 - i], grads['gamma' + str(t - i)], grads['beta' + str(t - i)] = batchnorm_backward(dbn[t - 1 - i],cache2[t - 1 - i])

dout[t - 1 - i], grads['W' + str(t - i)], grads['b' + str(t - i)] = affine_backward(dh[t - 1 - i],cache1[t - 1 - i])

else:

if self.use_dropout:

dout[t - i] = dropout_backward(dout[t - i], cache4[t - 1 - i])

dout[t - 1 - i], grads['W' + str(t - i)], grads['b' + str(t - i)] = affine_relu_backward(dout[t - i],cache3[t - 1 - i])

# Add the regularization gradient contribution

for i in xrange(self.num_layers):

grads['W' + str(i + 1)] += self.reg * self.params['W' + str(i + 1)]

return loss, grads

使用slover来对神经网络进优化求解

之后进行参数更新:

- SGD

- Momentum

- Nestero

- RMSProp and Adam

批量规范化

BN层前向传播:

BN层反向传播:

def batchnorm_forward(x, gamma, beta, bn_param):

mode = bn_param['mode'] #因为train和test是两种不同的方法

eps = bn_param.get('eps', 1e-5)

momentum = bn_param.get('momentum', 0.9)

N, D = x.shape

running_mean = bn_param.get('running_mean', np.zeros(D, dtype=x.dtype))

running_var = bn_param.get('running_var', np.zeros(D, dtype=x.dtype))

out, cache = None, None

if mode == 'train':

sample_mean = np.mean(x, axis=0, keepdims=True) # [1,D]

sample_var = np.var(x, axis=0, keepdims=True) # [1,D]

x_normalized = (x - sample_mean) / np.sqrt(sample_var + eps) # [N,D]

out = gamma * x_normalized + beta

cache = (x_normalized, gamma, beta, sample_mean, sample_var, x, eps)

running_mean = momentum * running_mean + (1 - momentum) * sample_mean #通过moument得到最终的running_mean和running_var

running_var = momentum * running_var + (1 - momentum) * sample_var

elif mode == 'test':

x_normalized = (x - running_mean) / np.sqrt(running_var + eps) #test的时候如何通过BN层

out = gamma * x_normalized + beta

else:

raise ValueError('Invalid forward batchnorm mode "%s"' % mode)

# Store the updated running means back into bn_param

bn_param['running_mean'] = running_mean

bn_param['running_var'] = running_var

return out, cache

def batchnorm_backward(dout, cache):

dx, dgamma, dbeta = None, None, None

x_normalized, gamma, beta, sample_mean, sample_var, x, eps = cache

N, D = x.shape

dx_normalized = dout * gamma # [N,D]

x_mu = x - sample_mean # [N,D]

sample_std_inv = 1.0 / np.sqrt(sample_var + eps) # [1,D]

dsample_var = -0.5 * np.sum(dx_normalized * x_mu, axis=0, keepdims=True) * sample_std_inv**3

dsample_mean = -1.0 * np.sum(dx_normalized * sample_std_inv, axis=0, keepdims=True) - \

2.0 * dsample_var * np.mean(x_mu, axis=0, keepdims=True)

dx1 = dx_normalized * sample_std_inv

dx2 = 2.0/N * dsample_var * x_mu

dx = dx1 + dx2 + 1.0/N * dsample_mean

dgamma = np.sum(dout * x_normalized, axis=0, keepdims=True)

dbeta = np.sum(dout, axis=0, keepdims=True)

return dx, dgamma, dbeta

Batch Normalization解决的一个重要问题就是梯度饱和。

Dropout

训练的时候以一定的概率来去每层的神经元:

可以防止过拟合。还可以理解为dropout是一个正则化的操作,他在每次训练的时候,强行让一些feature为0,这样提高了网络的稀疏表达能力。

def dropout_forward(x, dropout_param):

p, mode = dropout_param['p'], dropout_param['mode']

if 'seed' in dropout_param:

np.random.seed(dropout_param['seed'])

mask = None

out = None

if mode == 'train':

mask = (np.random.rand(*x.shape) < p) / p #注意这里除以了一个P,这样在test的输出的时候,维持原样即可

out = x * mask

elif mode == 'test':

out = x

cache = (dropout_param, mask)

out = out.astype(x.dtype, copy=False)

return out, cache

def dropout_backward(dout, cache):

dropout_param, mask = cache

mode = dropout_param['mode']

dx = None

if mode == 'train':

dx = dout * mask

elif mode == 'test':

dx = dout

return dx

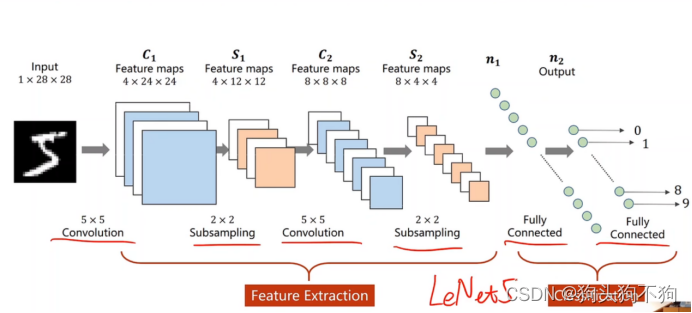

卷积神经网络

卷积层的前向传播与反向传播

def conv_forward_naive(x, w, b, conv_param):

stride, pad = conv_param['stride'], conv_param['pad']

N, C, H, W = x.shape

F, C, HH, WW = w.shape

x_padded = np.pad(x, ((0, 0), (0, 0), (pad, pad), (pad, pad)), mode='constant') #补零

H_new = 1 + (H + 2 * pad - HH) / stride

W_new = 1 + (W + 2 * pad - WW) / stride

s = stride

out = np.zeros((N, F, H_new, W_new))

for i in xrange(N): # ith image

for f in xrange(F): # fth filter

for j in xrange(H_new):

for k in xrange(W_new):

out[i, f, j, k] = np.sum(x_padded[i, :, j*s:HH+j*s, k*s:WW+k*s] * w[f]) + b[f]#对应位相乘

cache = (x, w, b, conv_param)

return out, cache

def conv_backward_naive(dout, cache):

x, w, b, conv_param = cache

pad = conv_param['pad']

stride = conv_param['stride']

F, C, HH, WW = w.shape

N, C, H, W = x.shape

H_new = 1 + (H + 2 * pad - HH) / stride

W_new = 1 + (W + 2 * pad - WW) / stride

dx = np.zeros_like(x)

dw = np.zeros_like(w)

db = np.zeros_like(b)

s = stride

x_padded = np.pad(x, ((0, 0), (0, 0), (pad, pad), (pad, pad)), 'constant')

dx_padded = np.pad(dx, ((0, 0), (0, 0), (pad, pad), (pad, pad)), 'constant')

for i in xrange(N): # ith image

for f in xrange(F): # fth filter

for j in xrange(H_new):

for k in xrange(W_new):

window = x_padded[i, :, j*s:HH+j*s, k*s:WW+k*s]

db[f] += dout[i, f, j, k]

dw[f] += window * dout[i, f, j, k]

dx_padded[i, :, j*s:HH+j*s, k*s:WW+k*s] += w[f] * dout[i, f, j, k]#上面的式子,关键就在于+号

# Unpad

dx = dx_padded[:, :, pad:pad+H, pad:pad+W]

return dx, dw, db

池化层

def max_pool_forward_naive(x, pool_param):

HH, WW = pool_param['pool_height'], pool_param['pool_width']

s = pool_param['stride']

N, C, H, W = x.shape

H_new = 1 + (H - HH) / s

W_new = 1 + (W - WW) / s

out = np.zeros((N, C, H_new, W_new))

for i in xrange(N):

for j in xrange(C):

for k in xrange(H_new):

for l in xrange(W_new):

window = x[i, j, k*s:HH+k*s, l*s:WW+l*s]

out[i, j, k, l] = np.max(window)

cache = (x, pool_param)

return out, cache

def max_pool_backward_naive(dout, cache):

x, pool_param = cache

HH, WW = pool_param['pool_height'], pool_param['pool_width']

s = pool_param['stride']

N, C, H, W = x.shape

H_new = 1 + (H - HH) / s

W_new = 1 + (W - WW) / s

dx = np.zeros_like(x)

for i in xrange(N):

for j in xrange(C):

for k in xrange(H_new):

for l in xrange(W_new):

window = x[i, j, k*s:HH+k*s, l*s:WW+l*s]

m = np.max(window) #获得之前的那个值,这样下面只要windows==m就能得到相应的位置

dx[i, j, k*s:HH+k*s, l*s:WW+l*s] = (window == m) * dout[i, j, k, l]

return dx